Node.js can be used to automate data transformation and downloading. For example, I recently had over 14,000 lines of code staring back at me, and I had to work out how to reach certain photo URLs nested within it. Even once I had a way to reach the URLs, I still had to download a total of 700 images from Pexels.com, a website that provides free images.

In situations like this, it’s great to know how to automate a data cleaning and downloading process. Node’s fs (file system) module turned out to be a perfect fit: I used it to clean the data, write the clean data to a new file, and then automate 700 photo downloads. It can even automate the creation of separate directories, as I did (100 directories with 7 photos each).

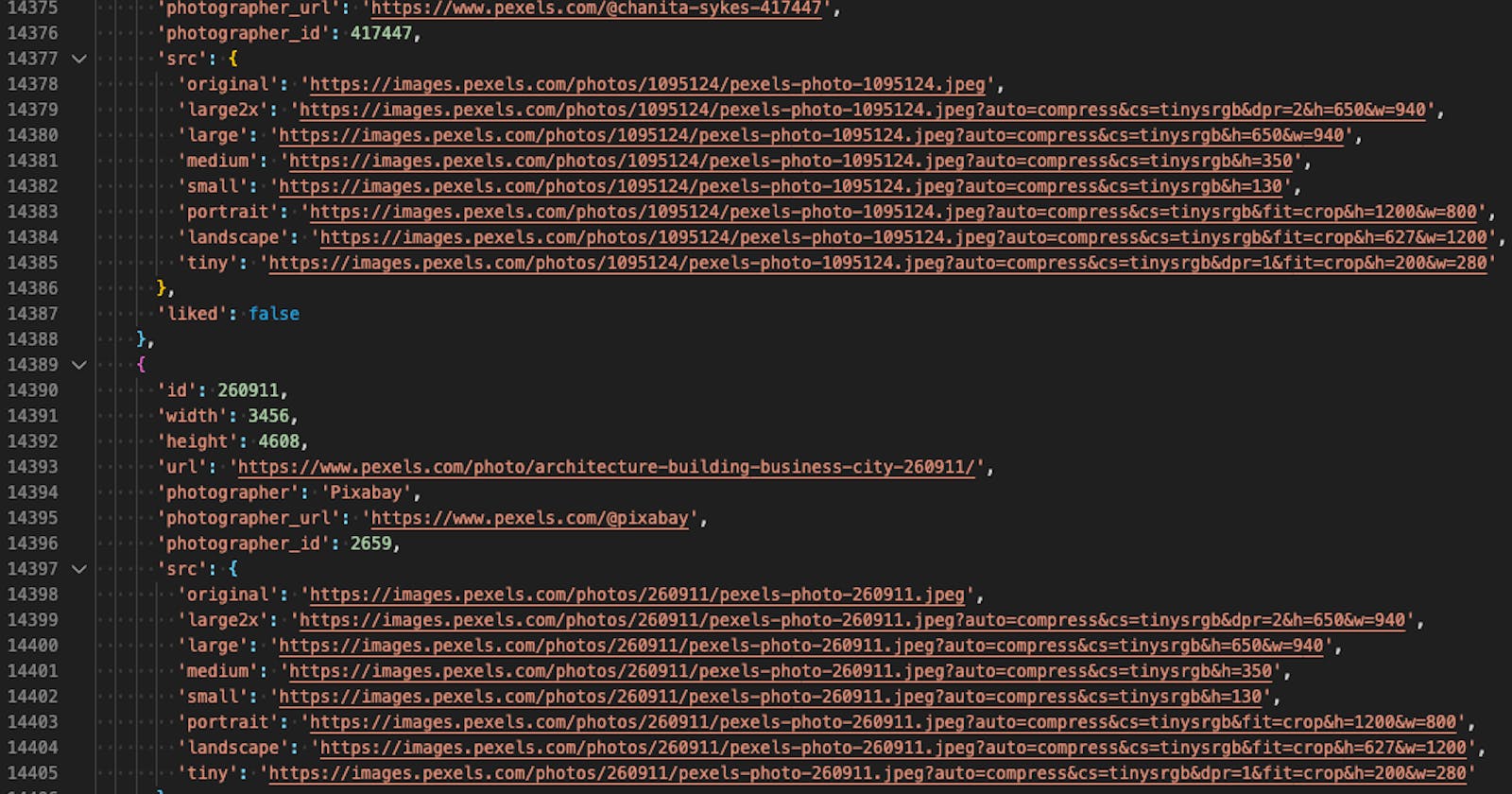

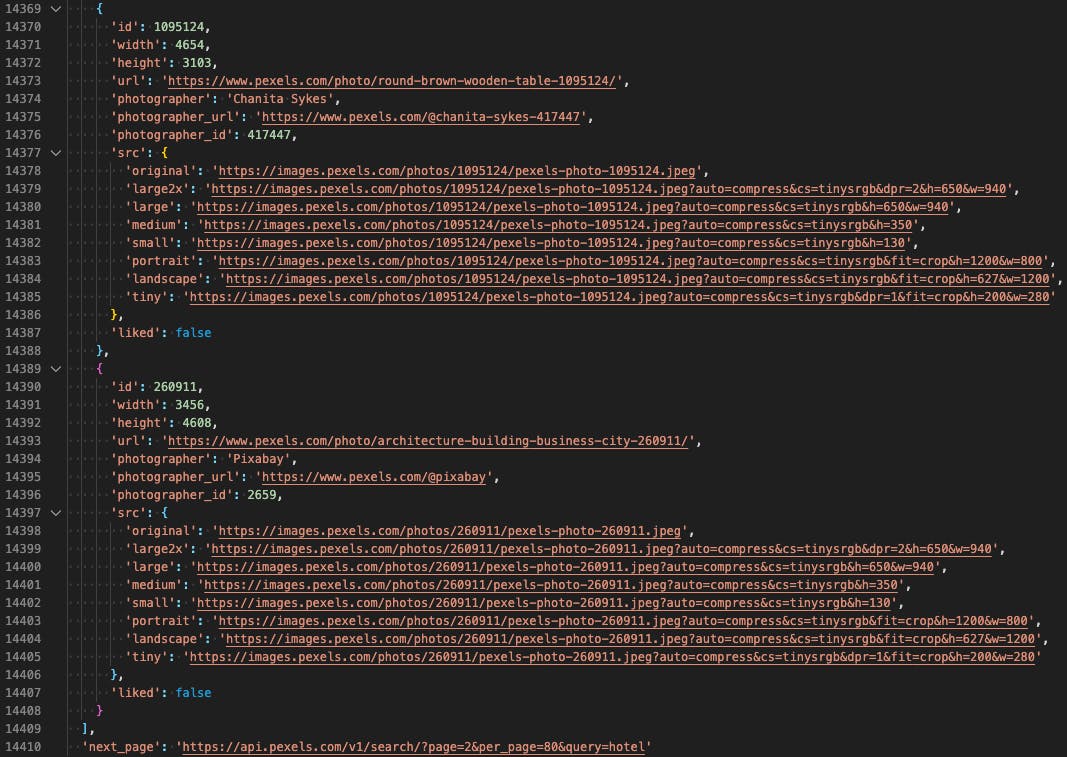

To begin, I investigated the raw data to find common keys for the URLs that I needed.

I decided on the unique id and the nested src.large photo URL. With a simple forEach loop, I iterated over the raw data, and on each iteration I pushed a new object to an array. Each object held the loop index as id, the photo’s unique identifier as pexelId, and the src.large URL as photo.

After the forEach loop, I used fs.writeFile. This method accepts the path of the new file (I used "cleanAPIdata.json"), the content to write to that file (in this case, the array of objects serialized with JSON.stringify), and a callback, which I used to log any unexpected errors and to print some terminal feedback upon success.

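```javascript
const fs = require('fs');
const path = require('path');

// `pexels` is the raw data object (with a `photos` array), loaded elsewhere.
const cleanAndOutputToNewFile = json => {
  let photos = json.photos;
  let resultsArray = [];

  // Keep only the index, the original photo id, and the large photo URL.
  photos.forEach((photo, i) => {
    let temp = {};
    temp.id = i;
    temp.pexelId = photo.id;
    temp.photo = photo.src.large;
    resultsArray.push(temp);
  });

  // Write the cleaned array next to this script as JSON.
  fs.writeFile(path.join(__dirname, 'cleanAPIdata.json'), JSON.stringify(resultsArray), err => {
    if (err) {
      console.error(err);
    } else {
      console.log('success!');
    }
  });
};

cleanAndOutputToNewFile(pexels);
```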
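To make the shape of the transformation concrete, here is a minimal sketch that runs the same kind of cleanup on a tiny, made-up sample. The sample object and its example.com URLs are hypothetical placeholders that only mimic the id and src.large fields used above. `cleanPhotos` is an illustrative name, and it swaps in `map` where the code above uses forEach plus push.

```javascript
// Hypothetical sample shaped like the raw data described above (not real Pexels data).
const sample = {
  photos: [
    { id: 111, src: { large: 'https://example.com/a.jpeg', small: 'https://example.com/a-small.jpeg' } },
    { id: 222, src: { large: 'https://example.com/b.jpeg', small: 'https://example.com/b-small.jpeg' } }
  ]
};

// Keep only a running index, the original id, and the large URL.
const cleanPhotos = json =>
  json.photos.map((photo, i) => ({
    id: i,
    pexelId: photo.id,
    photo: photo.src.large
  }));

const cleaned = cleanPhotos(sample);
console.log(JSON.stringify(cleaned, null, 2));
```

Everything else in each photo object (here, the small URL) is dropped, which is what keeps the output file compact.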

Armed with a freshly created JSON file, my next logical step was to prepare my file system to accept all the photos I was about to download. Node’s fs.mkdir method let me create 100 directories in a for-loop by passing in the directory location (I used ../images/${i}, so make sure the parent images directory already exists) and a callback to handle any unexpected errors. If you’re curious why I didn’t log anything upon success, it’s because I could see in my file system whether the directories had been made, and that was all the feedback I needed.

```javascript
const makeDirectories = () => {
  for (let i = 1; i <= 100; i++) {
    fs.mkdir(`../images/${i}`, err => {
      if (err) {
        console.error(err);
      }
    });
  }
};

makeDirectories();
```
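If the parent images directory might not exist yet, one option is the `recursive` option of `fs.mkdirSync` (available in Node 10.12 and later), which creates missing parent directories and does not throw when a directory is already there. The sketch below is a variant of the loop above, not the original code: `makeDirectoriesIn` and the temporary base directory are hypothetical, and it creates only 3 directories to keep the demo small.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: create `count` numbered directories under `baseDir`.
const makeDirectoriesIn = (baseDir, count) => {
  for (let i = 1; i <= count; i++) {
    // recursive: true also creates baseDir itself if it is missing.
    fs.mkdirSync(path.join(baseDir, String(i)), { recursive: true });
  }
};

// Demo in a throwaway temp directory instead of the real project tree.
const demoBase = path.join(fs.mkdtempSync(path.join(os.tmpdir(), 'images-')), 'images');
makeDirectoriesIn(demoBase, 3);
console.log(fs.readdirSync(demoBase).sort());
```

The synchronous call is used here only so the directories are guaranteed to exist before any download starts; the callback version shown earlier works just as well if nothing depends on the directories immediately.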

Now the fun part: making HTTP requests to download each photo. I used Axios with the response type set to stream. This setting is part of an options object that can be passed to the Axios request. For example,

```javascript
{
  method: "GET",
  url: "an-individual-pexel-photo-url",
  responseType: "stream"
}
```

The stream option indicates the type of response I was requesting from the server.

But what is a stream? A stream is an efficient way to interact with data, usually a large amount of it, like media (think streaming movies from Netflix). The data are sent in chunks, so a user doesn’t have to wait for the entire asset to load into memory, and a user or a program can begin to work with the data sooner. Thus, streams are both memory efficient and time efficient.
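To see the chunking idea in isolation, here is a small sketch that uses only Node’s built-in stream module. The three strings are made-up stand-ins for pieces of a large download; a real response would deliver binary chunks instead.

```javascript
const { Readable } = require('stream');

// Made-up chunks standing in for pieces of a large download.
const source = Readable.from(['chunk-1 ', 'chunk-2 ', 'chunk-3']);

let received = 0;

// Each 'data' event delivers one chunk, so work can start before the rest arrives.
source.on('data', chunk => {
  received += 1;
  console.log(`got chunk ${received}: ${chunk.length} characters`);
});

// 'end' fires once the source has no more chunks to give.
source.on('end', () => {
  console.log(`done after ${received} chunks`);
});
```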

Since Axios returns a Promise, once the response stream began, I used a .then method on the Promise to start piping the readable stream into a writable stream. To clarify, with the server’s stream response, I was able to implement the following concept: response.data.pipe(writable stream) where response was the server’s response object, and data was the property containing the requested data.

As for pipe, according to Node’s docs, pipes:

> limit the buffering of data to acceptable levels such that sources and destinations of differing speeds will not overwhelm the available memory.

It is essentially a handoff mechanism. The writable stream I mentioned earlier is the destination that Node is talking about — my photo file.
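The same handoff can be tried without any network access. The sketch below pipes a small in-memory readable stream into a file in the system temp directory, then listens for the writable stream’s `finish` event, which fires once all the data has been flushed. The file location and the fake image bytes are assumptions for illustration only.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { Readable } = require('stream');

// Fake "image" data: two buffers standing in for chunks of a server response.
const fakeResponse = Readable.from([Buffer.from('first-half;'), Buffer.from('second-half')]);

// Hypothetical destination inside a fresh temp directory.
const destination = path.join(fs.mkdtempSync(path.join(os.tmpdir(), 'pipe-demo-')), '0.jpeg');
const writable = fs.createWriteStream(destination);

// pipe() hands chunks from the source to the destination and manages the pace.
fakeResponse.pipe(writable);

// 'finish' means every chunk has been written out.
writable.on('finish', () => {
  console.log(`wrote ${fs.statSync(destination).size} bytes to ${destination}`);
});
writable.on('error', console.error);
```

Listening for `finish` is optional for a simple download, but it is a convenient hook if something needs to happen only after a file is fully on disk.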

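```javascript
const axios = require('axios');
const fs = require('fs');
// Assumes this script sits next to the JSON file written in the first step.
const cleanAPIdata = require('./cleanAPIdata.json');

let folder = 1;
let imgCounter = 0;

const downloadImages = obj => {
  let url = obj.photo;

  axios({
    method: 'GET',
    url: url,
    responseType: 'stream'
  })
    .then(res => {
      // Pipe the response stream straight into a file on disk.
      res.data.pipe(fs.createWriteStream(`../images/${folder}/${imgCounter}.jpeg`));
      imgCounter++;

      // After 7 photos, reset the counter and move on to the next folder.
      if (imgCounter === 7) {
        imgCounter = 0;
        folder++;
      }
    })
    .catch(console.error);
};

const startDownloadingImages = () => {
  for (let i = 0; i < cleanAPIdata.length; i++) {
    downloadImages(cleanAPIdata[i]);
  }
};

startDownloadingImages();
```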

To create the writable stream, I made use of Node’s fs.createWriteStream method. This method accepts a destination path, which I used to specify that I was anticipating a jpeg file. Altogether, the line of code that connects the source and destination streams is:

```javascript
res.data.pipe(fs.createWriteStream(`../images/${folder}/${imgCounter}.jpeg`))
```

A template literal is used here because the 700 HTTP requests were made as part of a for-loop where I kept count of the number of photos, and once the counter reached 7, then I incremented the folder. The result was 7 photos in each of the 100 directories!
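Because the counters advance as responses arrive, which photo lands in which folder depends on the order in which the responses come back. If a fixed mapping is preferred, the folder and file number can instead be derived from a photo’s position in the array. This is an alternative sketch, not the approach used above; `targetPath` and `PHOTOS_PER_FOLDER` are hypothetical names.

```javascript
const PHOTOS_PER_FOLDER = 7;

// Map an array index (0..699) to a destination path.
const targetPath = index => {
  const folder = Math.floor(index / PHOTOS_PER_FOLDER) + 1; // folders start at 1
  const file = index % PHOTOS_PER_FOLDER;                   // files run 0..6
  return `../images/${folder}/${file}.jpeg`;
};

console.log(targetPath(0));   // ../images/1/0.jpeg
console.log(targetPath(6));   // ../images/1/6.jpeg
console.log(targetPath(7));   // ../images/2/0.jpeg
console.log(targetPath(699)); // ../images/100/6.jpeg
```

With this mapping, the photo at index i always ends up at the same path, no matter which request finishes first.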

Though this is a powerful way to automate data conversion and downloading, be mindful of copyrights and of the terms and conditions of the sites you pull from. And, though writing code to access information on the internet may be protected by the First Amendment, that doesn’t mean one is not violating any laws. For example, one company has recently become involved in a legal battle over scraping the internet for photos. The photos are publicly available, but the company uses them to create biometric facial recognition surveillance technology. So, be ethical in your decisions with this powerful knowledge.